#softwares EDA

Explore tagged Tumblr posts

Text

US software ban hits Xiaomi, Lenovo and other Chinese giants

Chinese companies such as Xiaomi and Lenovo, among other technology giants, are facing new challenges. The United States government has decided to prohibit the supply of EDA (Electronic Design Automation) software to Chinese companies that appear on a trade restriction list. This software is fundamental to the creation of chips and integrated circuits. With this new…

#autossuficiência tecnológica#bloqueio de software#cadeia de semicondutores#guerra comercial#Lenovo e Xiaomi#produção de chips#softwares EDA#tecnologia chinesa

0 notes

Text

Well, my meds are on backorder and I think the barometric pressure swing is screwing with me too, so please welcome “need to keep hands occupied during business calls” mspaint.exe RJ

ugh I just want to feel like I’m not under 10 feet of water and my head to not be stuffed with cotton balls

#I keep my first Wacom here at the office but installing any other graphics software on my work computer would probably raise some eyebrows#tablet is good for creating exhibits#adhd problems#fallout#fallout 4#fo4#maccready#rj maccready#robert joseph maccready#eda draws

41 notes

Text

Discover the Synopsys stock price forecast for 2025–2029, with insights into financial performance, AI-driven growth, and investment tips. #Synopsys #SNPS #SNPSstock #stockpriceforecast #EDAsoftware #semiconductorIP #AIchipdesign #stockinvestment #financialperformance #Ansysacquisition #sharebuyback

#AI chip design#Ansys acquisition#Best semiconductor stocks to buy#EDA software#Financial performance#Investment#Investment Insights#Is Synopsys a good investment#semiconductor IP#share buyback#SNPS#SNPS stock#SNPS stock analysis 2025–2029#Stock Forecast#Stock Insights#stock investment#Stock Price Forecast#Synopsys#Synopsys AI-driven chip design#Synopsys Ansys acquisition impact#Synopsys financial performance 2024#Synopsys share buyback program#Synopsys stock#Synopsys stock buy or sell#Synopsys stock price forecast 2025#Synopsys stock price target 2025

0 notes

Text

Electronic design automation (EDA) workloads: using IBM Cloud

Electronic design automation (EDA) is a market sector made up of hardware, software, and services that are used to help define, plan, design, implement, verify, and then manufacture semiconductor devices (or chips). Foundries or fabs that produce semiconductors are the main suppliers of this service.

EDA solutions are essential in three aspects even if they are not directly engaged in the production of chips:

To make sure the semiconductor manufacturing process produces the necessary performance and density, EDA tools are employed in its design and validation.

EDA tools are used to confirm that a design satisfies every criterion related to the manufacturing process. We call this area of study “design for manufacturability” (DFM).

The need to track the chip’s performance from the point of manufacture to field deployment is becoming more and more pressing. The term “silicon lifecycle management” (SLM) describes this third use.

EDA HPC environments are constantly expanding in size because of increased competition, the need to get products to market more quickly, and the growing demand for compute to handle higher-fidelity simulation and modeling workloads. Businesses are trying to figure out how to effectively leverage technologies like hybrid cloud, accelerators, and containerization to obtain a competitive computing advantage.

The integrated circuit design and Electronic design automation software

In order to shape and validate state-of-the-art semiconductor chips, optimize their manufacturing processes, and guarantee that improvements in performance and density are reliably and consistently realized, Electronic design automation (EDA) software is essential.

For startups and small enterprises looking to enter this industry, the costs of purchasing and maintaining the computer environments, tools, and IT know-how required to use Electronic design automation tools pose a substantial obstacle. In addition, these expenses continue to be a major worry for well-established companies that use EDA designs. A key component of success is meeting deadlines, and chip designers and manufacturers are under tremendous pressure to introduce new chip generations with improved density, dependability, and efficiency.

Read more on Govindhtech.com

0 notes

Text

Giving Tuesday – KiCad open-source design #GivingTuesday 💻🔧🌍❤️🤝

KiCad is an open-source software suite for electronic design automation (EDA), enabling users to design schematics and printed circuit boards (PCBs). KiCad is a great, free, open tool for creating complex designs, from hobbyist projects to professional-grade hardware. As an open-source initiative, KiCad promotes accessibility and collaboration, making advanced EDA tools freely available. Supporting KiCad helps the development of features, bug fixes, and community-driven improvements, empowering engineers, educators, and hobbyists worldwide. Consider donating to KiCad to strengthen the open-source hardware community and help make high-quality EDA tools accessible to everyone.

In the past year, they posted this nifty chart that shows what your support can help accomplish.

If KiCad is valuable to you, please consider donating to help make it even better.

#kicad#kicadpcb#electronics#eda#schematic#pcbdesign#pcblayout#opensource#edatools#openhardware#donatetoday#engineeringdesign#communitysupport#openedsystems#opensourcecommunity#pcbtools#givingback#generositymatters#techforgood#makercommunity#electronicsdesign#designsoftware#kicaddonation#hardwaredesign#opensourceinitiative#techcommunity#sharekindness

11 notes

Note

🖊️ One for Val because she intrigued me

Oooh hi!! Ty for asking about Val because I do love her haha <3

So something I'm working on for Val is an elevated form of shapeshifting magic.

A long time ago, I read on either the DA wiki or somewhere else that a long time ago, powerful mages could shapeshift into mythical beasts instead of just regular animals. And I mean, that tracks right - Flemythal could turn into a Dragon, so there is a basis for this.

I think since Val puts a majority of her focus on shapeshifting magic, it's not impossible to think that she could learn to transform into something more than a common wolf or a big spider.

Val most often takes the form of an owl. She actually finds it a more apt representation of what she does rather than a crow, despite being A Crow (lol). Owls are cat software running on bird hardware - they are some of the best avian predators around (insane grip strength in terms of pounds per square inch, stereoscopic hearing, night-vision, wings tailored to fly SILENTLY- you won't see them coming until it's too late).

Val, like many of our Rooks, is an expert in thinking outside the box. What if one could stop the transformation process half-way and retain some animal features in a human form, or just turn into a weird/almost demonic half-bird half-human ... thing. Or just a more demonic version of that animal (like the demon bear that Sloth looks like in DA:O in Mage Origin). I have this HC that she experiments with herself despite literally everyone telling her not to - it's unexplored territory. Who knows what could happen. Gurl, you could get STUCK like that forever for all you know!!

Is Val going to listen? Absolutely not. She's going to push herself further and further, so that if any threat like the Gods comes knocking in Thedas' door she can spring into action. She and Fenris don't plan on retiring into obscurity after the events of DATV. There's a lot of corruption to fight even though the Blight is over and the Gods are dead.

Current inspo is some kind of hybrid between Wan Shi Tong from ATLA and Eda the Owl Lady from Owl House

"BE NOT AFRAID, FENRIS"

#asks#championofthefade#I've talked about this before and i do still want to do this#writing it out just keeps it in my mind as a reminder like girl dont forget u cursed one of ur oc's to look like a demon owl#get on that#oc: val de riva

3 notes

Text

Oh no the drunk pr0n bots are catching up to us!

They know promises of sex won't work on an asexual so they're like "Hi I'm FRIENDLY :D let's have some FRIENDLY fun!! I promise the peach and no under 18 are because we'll have some peach flavoured drugs :D"

For real though what monstrosity of a software is generating these drunk sentences. What code are they written in. WHAT AM I SUPPOSED TO MAKE OUT OF THIS, PR0N BOT EDA???

23 notes

Text

Essential Predictive Analytics Techniques

With the growing usage of big data analytics, predictive analytics uses a broad and highly diverse array of approaches to assist enterprises in forecasting outcomes. Examples of predictive analytics include deep learning, neural networks, machine learning, text analysis, and artificial intelligence.

Today's predictive analytics trends mirror existing big data trends. There is little distinction between the software tools used in predictive analytics and those used in big data analytics solutions. In summary, big data and predictive analytics technologies are closely linked, if not identical.

Predictive analytics approaches are used to evaluate a person's creditworthiness, rework marketing strategies, predict the contents of text documents, forecast weather, and create safe self-driving cars with varying degrees of success.

Predictive Analytics- Meaning

Predictive analytics is the discipline of forecasting future trends by evaluating collected data. By learning from historical trends, organizations can modify their marketing and operational strategies to serve customers better. In addition to these functional enhancements, businesses benefit in crucial areas like inventory control and fraud detection.

Machine learning and predictive analytics are closely related. Regardless of the precise method a company uses, the overall procedure starts with an algorithm that learns through access to a known result (such as a customer purchase).

The training algorithms use the data to learn how to forecast outcomes, eventually creating a model that is ready for use and can take additional input variables, like the day and the weather.

Employing predictive analytics significantly increases an organization's productivity, profitability, and flexibility. Let us look at the techniques used in predictive analytics.

Techniques of Predictive Analytics

Making predictions based on existing and past data patterns requires using several statistical approaches, data mining, modeling, machine learning, and artificial intelligence. Machine learning techniques, including classification models, regression models, and neural networks, are used to make these predictions.

Data Mining

To find anomalies, trends, and correlations in massive datasets, data mining is a technique that combines statistics with machine learning. Businesses can use this method to transform raw data into business intelligence, including current data insights and forecasts that help decision-making.

Data mining is sifting through redundant, noisy, unstructured data to find patterns that reveal insightful information. A form of data mining methodology called exploratory data analysis (EDA) includes examining datasets to identify and summarize their fundamental properties, frequently using visual techniques.

EDA focuses on objectively probing the facts without any expectations; it does not entail hypothesis testing or the deliberate search for a solution. On the other hand, traditional data mining focuses on extracting insights from the data or addressing a specific business problem.
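As a minimal sketch of what summarizing a dataset's fundamental properties can look like, the snippet below computes a few descriptive statistics with Python's standard library. The `summarize` helper and the `daily_orders` values are invented for illustration and are not taken from any real dataset.

```python
import statistics


def summarize(values):
    """Return basic descriptive statistics for a list of numbers."""
    ordered = sorted(values)
    return {
        "count": len(ordered),
        "min": ordered[0],
        "max": ordered[-1],
        "mean": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "stdev": statistics.stdev(ordered),
    }


# Hypothetical daily order counts, invented for illustration.
daily_orders = [12, 15, 11, 14, 90, 13, 16]
summary = summarize(daily_orders)
print(summary)
```

In this made-up sample the mean sits well above the median, which is the kind of hint (here, a single unusually large day) that exploratory analysis is meant to surface before any model is built.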

Data Warehousing

Most extensive data mining projects start with data warehousing. An example of a data management system is a data warehouse created to facilitate and assist business intelligence initiatives. This is accomplished by centralizing and combining several data sources, including transactional data from POS (point of sale) systems and application log files.

A data warehouse typically includes a relational database for storing and retrieving data, an ETL (Extract, Transform, Load) pipeline for preparing the data for analysis, statistical analysis tools, and client analysis tools for presenting the data to clients.
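The extract, transform, and load steps can be sketched in a few lines. This is only an illustration under stated assumptions: the point-of-sale rows, the `sales` table, and the three helper functions are hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import sqlite3


def extract():
    """Extract: hypothetical raw POS rows as (store, amount-as-text)."""
    return [("north", "19.99"), ("south", "5.00"), ("north", "0.01")]


def transform(rows):
    """Transform: normalize store names and convert amounts to integer cents."""
    return [(store.upper(), round(float(amount) * 100)) for store, amount in rows]


def load(rows, connection):
    """Load: write the cleaned rows into a relational table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS sales (store TEXT, amount_cents INTEGER)"
    )
    connection.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    connection.commit()


connection = sqlite3.connect(":memory:")  # stand-in for a real warehouse
load(transform(extract()), connection)

# A simple analysis query over the centralized data.
totals = dict(
    connection.execute("SELECT store, SUM(amount_cents) FROM sales GROUP BY store")
)
print(totals)
```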

Clustering

One of the most often used data mining techniques is clustering, which divides a massive dataset into smaller subsets by categorizing objects based on their similarity into groups.

When consumers are grouped together based on shared purchasing patterns or lifetime value, customer segments are created, allowing the company to scale up targeted marketing campaigns.

Hard clustering entails the categorization of data points directly. Instead of assigning a data point to a cluster, soft clustering gives it a likelihood that it belongs in one or more clusters.
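The difference between hard and soft clustering can be shown with a small sketch. It assumes the cluster centres are already known and uses one-dimensional data; the softmax-style weighting is just one simple way to produce membership likelihoods, chosen here for illustration rather than taken from any particular algorithm.

```python
import math


def hard_assign(point, centroids):
    """Hard clustering: return the index of the single nearest centroid."""
    distances = [abs(point - c) for c in centroids]
    return distances.index(min(distances))


def soft_assign(point, centroids):
    """Soft clustering: return one membership weight per centroid (sums to 1)."""
    # Closer centroids get exponentially more weight.
    scores = [math.exp(-((point - c) ** 2)) for c in centroids]
    total = sum(scores)
    return [s / total for s in scores]


# Hypothetical cluster centres, e.g. two customer spending levels.
centroids = [1.0, 5.0]
print(hard_assign(2.5, centroids))
print(soft_assign(2.5, centroids))
```

A point exactly halfway between the two centres still gets a single label from `hard_assign`, while `soft_assign` reports an even split, which is the information hard clustering throws away.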

Classification

A prediction approach called classification involves estimating the likelihood that a given item falls into a particular category. A binary classification problem has only two classes, whereas a multiclass classification problem has more than two.

Classification models produce a continuous number, usually called confidence, that reflects the likelihood that an observation belongs to a specific class. A predicted probability can be turned into a class label by choosing the class with the highest probability.

Spam filters, which categorize incoming emails as "spam" or "not spam" based on predetermined criteria, and fraud detection algorithms, which highlight suspicious transactions, are the most prevalent examples of categorization in a business use case.
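Turning predicted probabilities into a class label, as described above, might look like the following sketch. The probabilities are made up, and the function names and the 0.5 threshold are assumptions for illustration; real systems often tune the threshold to the cost of each kind of mistake.

```python
def predict_label(probabilities):
    """Multiclass case: pick the class with the highest predicted probability."""
    return max(probabilities, key=probabilities.get)


def is_spam(spam_probability, threshold=0.5):
    """Binary case: flag as spam when the probability reaches the threshold."""
    return spam_probability >= threshold


# Hypothetical model output for one incoming email.
email_scores = {"spam": 0.8, "not spam": 0.2}
print(predict_label(email_scores))
print(is_spam(email_scores["spam"]))
```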

Regression Model

When a company needs to forecast a numerical value, such as how long a potential customer will wait to cancel an airline reservation or how much money they will spend on auto payments over time, it can use a regression method.

For instance, linear regression is a popular regression technique that fits a straight-line relationship between two variables. Regression algorithms of this type look for patterns that describe how variables are related, such as the association between consumer spending and the amount of time spent browsing an online store.
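A minimal sketch of simple linear regression, using the ordinary least-squares formulas for one input variable, is shown below. The browsing-time and spending figures are invented so that the true relationship is exactly spend = 2 × minutes + 2; `fit_line` is a hypothetical helper, not part of any library.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: minutes spent browsing vs. amount spent.
minutes = [5, 10, 15, 20]
spend = [12.0, 22.0, 32.0, 42.0]

slope, intercept = fit_line(minutes, spend)
predicted_spend = slope * 30 + intercept  # forecast for a 30-minute session
print(slope, intercept, predicted_spend)
```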

Neural Networks

Neural networks are data processing methods with biological influences that use historical and present data to forecast future values. They can uncover intricate relationships buried in the data because of their design, which mimics the brain's mechanisms for pattern recognition.

They have several layers that take input (input layer), calculate predictions (hidden layer), and provide output (output layer) in the form of a single prediction. They are frequently used for applications like image recognition and patient diagnostics.
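The layer structure described above can be sketched as a single forward pass through a tiny network with one hidden layer. The weights here are arbitrary placeholders rather than trained values, and the sigmoid activation is an assumption chosen for simplicity.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """Input layer -> hidden layer -> single output prediction."""
    hidden = [
        sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    return sigmoid(sum(w * h for w, h in zip(output_weights, hidden)) + output_bias)


# Placeholder (untrained) parameters: 2 inputs, 2 hidden units, 1 output.
prediction = forward(
    inputs=[0.5, -1.0],
    hidden_weights=[[0.4, 0.1], [-0.3, 0.8]],
    hidden_biases=[0.0, 0.1],
    output_weights=[1.2, -0.7],
    output_bias=0.05,
)
print(prediction)
```

Training, which is what lets the network uncover relationships in the data, consists of adjusting those weights and biases so the predictions match known outcomes; that step is left out of this sketch.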

Decision Trees

A decision tree is a graphic diagram that looks like an upside-down tree. Starting at the "roots," one walks through a continuously narrowing range of alternatives, each illustrating a possible decision conclusion. Decision trees may handle various categorization issues, but they can resolve many more complicated issues when used with predictive analytics.

An airline, for instance, would be interested in learning the optimal time to travel to a new location it intends to serve weekly. Along with knowing what pricing to charge for such a flight, it might also want to know which client groups to cater to. The airline can utilize a decision tree to acquire insight into the effects of selling tickets to destination x at price point y while focusing on audience z, given these criteria.

Logistic Regression

It is used when determining the likelihood of success in terms of Yes or No, Success or Failure. We can utilize this model when the dependent variable has a binary (Yes/No) nature.

Because it applies a non-linear log transformation to the odds, it can handle various types of relationships without requiring a linear link between the dependent and independent variables, unlike a linear model. Large sample sizes are also needed to predict future results reliably.

Ordinal logistic regression is used when the dependent variable's value is ordinal, and multinomial logistic regression is used when the dependent variable's value is multiclass.
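For the binary case, the link between the log of the odds and the predicted probability can be sketched as follows. The coefficients and feature values are made up for illustration; in practice they would be estimated from data.

```python
import math


def log_odds(features, coefficients, intercept):
    """Linear part of the model: the log of the odds of a 'Yes' outcome."""
    return intercept + sum(c * x for c, x in zip(coefficients, features))


def predict_probability(features, coefficients, intercept):
    """Convert log-odds to a probability with the logistic (sigmoid) function."""
    return 1.0 / (1.0 + math.exp(-log_odds(features, coefficients, intercept)))


# Hypothetical fitted model with two input variables.
coefficients = [0.8, -0.5]
intercept = -0.2

p = predict_probability([1.5, 2.0], coefficients, intercept)
print(p, "Yes" if p >= 0.5 else "No")
```

The model is linear in the log-odds rather than in the probability itself, which is why the relationship between the inputs and the Yes/No outcome does not need to be a straight line.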

Time Series Model

Time series models use past data to forecast the future behavior of variables. Typically, these models are built on a stochastic process Y(t), which denotes a sequence of random variables.

A time series might have an annual (annual budgets), quarterly (sales), monthly (expenses), or daily (stock prices) frequency. If you use only the time series' own past values to predict its future values, it is referred to as univariate time series forecasting. If you also include exogenous variables, it is referred to as multivariate time series forecasting.

One of the most popular time series models that can be built in Python is ARIMA, or Auto Regressive Integrated Moving Average. It is a forecasting technique based on the straightforward notion that a time series' past values provide valuable information about its future values.
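To make the idea of forecasting from past values concrete, here is a deliberately simplified sketch. It is not a full ARIMA model: it fits only a first-order autoregressive term, y[t] = c + phi × y[t-1], by least squares, with no differencing and no moving-average part. The series is invented so that it follows that rule exactly, and the helper names are hypothetical.

```python
def fit_ar1(series):
    """Estimate c and phi in y[t] = c + phi * y[t-1] by least squares."""
    previous = series[:-1]
    current = series[1:]
    n = len(previous)
    mean_prev = sum(previous) / n
    mean_curr = sum(current) / n
    sxy = sum((p - mean_prev) * (y - mean_curr) for p, y in zip(previous, current))
    sxx = sum((p - mean_prev) ** 2 for p in previous)
    phi = sxy / sxx
    c = mean_curr - phi * mean_prev
    return c, phi


def forecast_next(series, c, phi):
    """One-step-ahead univariate forecast from the last observed value."""
    return c + phi * series[-1]


# Hypothetical series generated by y[t] = 1 + 0.5 * y[t-1].
series = [10.0, 6.0, 4.0, 3.0, 2.5]
c, phi = fit_ar1(series)
next_value = forecast_next(series, c, phi)
print(c, phi, next_value)
```

For real work, a dedicated time series library would normally handle the differencing and moving-average components as well as model selection.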

In Conclusion-

Although predictive analytics techniques have had their fair share of critiques, including the claim that computers or algorithms cannot foretell the future, predictive analytics is now extensively employed in virtually every industry. As we gather more and more data, we can anticipate future outcomes with a certain level of accuracy. This makes it possible for institutions and enterprises to make wise judgments.

Implementing Predictive Analytics is essential for anybody searching for company growth with data analytics services since it has several use cases in every conceivable industry. Contact us at SG Analytics if you want to take full advantage of predictive analytics for your business growth.

2 notes

Text

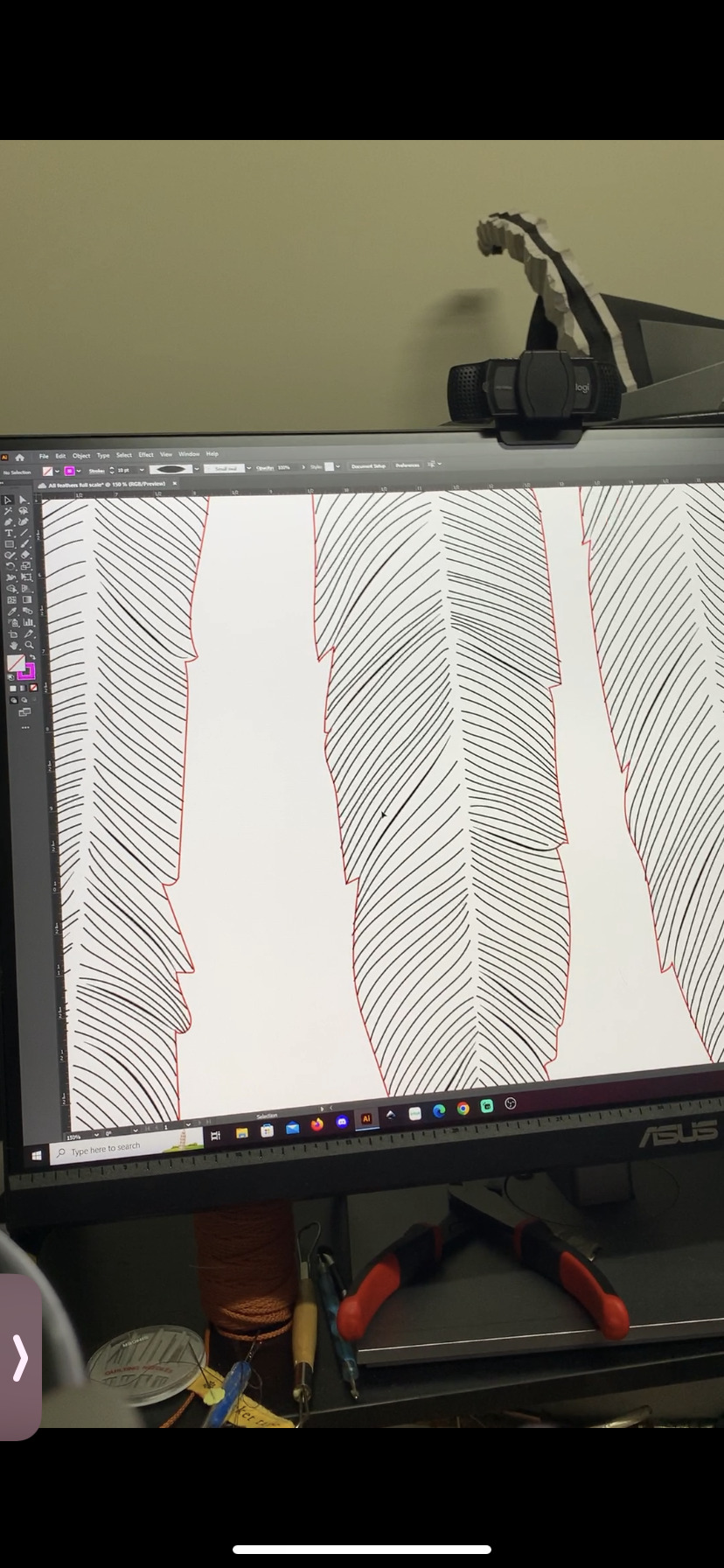

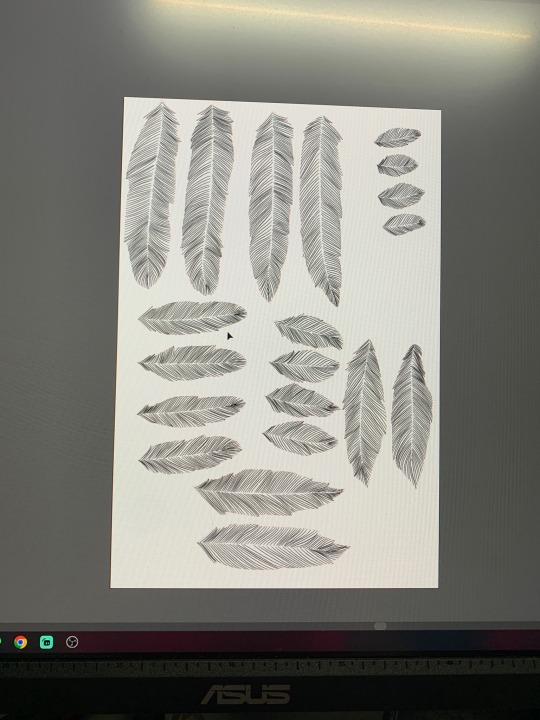

Harpy Eda Progress (random stuff because wow this project has been pure chaos)

Progress has been made but it’s all over the place since I’m procrastinating willingly on some things and forced to wait on others. So here is a bit of a progress update on random stuff

1. Wig

I bought a lace front in gray from Arda Wigs and one of their long white clip-on ponytails. I crimped both the ponytail and the wig, dismantled the ponytail’s mesh base, and took out the white wefts to glue them onto the main wig. Then I went back and teased almost the entire wig and steamed it to tone down the flyaways. I actually went through the spiking process twice because I hated the first version and didn’t account for the ears. (First version on the left, semi final version on the right)

2. Ears

I have tiny baby ears so I wasn’t sure I could find prosthetics in my size that weren’t custom made (aka expensive). So naturally I decided to spend a lot of time- and some money- to make them myself. I went through the long process of live-casting my ears with alginate (the goopy stuff they use to take tooth impressions at the dentist) and made a plaster cast of my small baby ears. I did a sculpt with Monster Clay and did a second alginate mold and plaster cast to have a nice base for the final latex ears. For the actual ears, these are liquid latex tinted with white acrylic paint and they are brushed/dabbed onto the base ears in thin layers. After 15-20 layers, I have to apply a stupid amount of baby powder to keep the latex from sticking to itself, and then I can carefully peel them off the plaster masters. They just get glued on with Prosaide afterwards and are pretty comfortable to wear.

3. Feathers

I have been in Adobe Illustrator hell creating feather SVGs for the laser cutter software Lightburn so that I can have a machine cut EVA foam feathers for me while I’m busy avoiding my corset mock-up by doing a full fabric stash inventory. There are five feather sizes with four variants of shapes in each size. Every feather has a red outline and dozens of detail texture lines drawn as tapered lines. These are plopped onto appropriately sized art boards that match the cutting area of the laser cutter which is 15x15 inches. Adobe also exports SVGs at 72 ppi (pixels per inch) and Lightburn treats everything as 96 ppi so I had to rescale everything. (Yes, me and the owner of the laser cutter found this through trial and error). The end result is a gorgeous bunch of feathers that I did not have to engrave by hand. There’s currently about 120 out of over 400 feathers cut so far
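For anyone hitting the same mismatch, here is a rough sketch of the rescale arithmetic in Python. It only assumes the two numbers from above (72 ppi on export, 96 ppi on import); the function names are made up and nothing here talks to Illustrator or Lightburn.

```python
EXPORT_PPI = 72  # units per inch written on export (per the post)
IMPORT_PPI = 96  # units per inch assumed on import (per the post)


def imported_inches(size_units, import_ppi=IMPORT_PPI):
    """Physical size the importing program thinks a length has."""
    return size_units / import_ppi


def rescale_factor(export_ppi=EXPORT_PPI, import_ppi=IMPORT_PPI):
    """Factor to scale the artwork by so it imports at its intended size."""
    return import_ppi / export_ppi


# A 15 inch art board exported at 72 units per inch is 1080 units wide.
board_units = 15 * EXPORT_PPI
print(imported_inches(board_units))                     # too small without a fix
print(imported_inches(board_units * rescale_factor()))  # back to the intended size
```

So without the fix a 15 inch board comes in at 11.25 inches, and scaling everything up by 96/72 (about 133.33%) puts it back where it should be.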

Everything else is still in early stages or waiting to be done after something else finishes (like the dress or corset that will support the wings’ backplate)

3 notes

Text

Apple hints at AI integration in chip design process

New Post has been published on https://thedigitalinsider.com/apple-hints-at-ai-integration-in-chip-design-process/

Apple is beginning to use generative artificial intelligence to help design the chips that power its devices. The company’s hardware chief, Johny Srouji, made that clear during a speech last month in Belgium. He said Apple is exploring AI as a way to save time and reduce complexity in chip design, especially as chips grow more advanced.

“Generative AI techniques have a high potential in getting more design work in less time, and it can be a huge productivity boost,” Srouji said. He was speaking while receiving an award from Imec, a semiconductor research group that works with major chipmakers around the world.

He also mentioned how much Apple depends on third-party software from electronic design automation (EDA) companies. The tools are key to developing the company’s chips. Synopsys and Cadence, two of the biggest EDA firms, are both working to add more AI into their design tools.

From the A4 to Vision Pro: A design timeline

Srouji’s remarks offered a rare glimpse into Apple’s internal process. He walked through Apple’s journey, starting with the A4 chip in the iPhone 4, launched in 2010. Since then, Apple has built a range of custom chips, including those used in the iPad, Apple Watch, and Mac. The company also developed the chips that run the Vision Pro headset.

He said that while hardware is important, the real challenge lies in design. Over time, chip design has become more complex and now requires tight coordination between hardware and software. Srouji said AI has the potential to make that coordination faster and more reliable.

Why Apple is working with Broadcom on server chips

In late 2024, Apple began a quiet project with chip supplier Broadcom to develop its first AI server chip. The processor, known internally as “Baltra,” is said to be part of Apple’s larger plan to support more AI services on the back end. That includes features tied to Apple Intelligence, the company’s new suite of AI tools for iPhones, iPads, and Macs.

Baltra is expected to power Apple’s private cloud infrastructure. Unlike devices that run AI locally, this chip will sit in servers, likely inside Apple’s own data centres. It would help handle heavier AI workloads that are too much for on-device chips.

On-device vs. cloud: Apple’s AI infrastructure split

Apple is trying to balance user privacy with the need for more powerful AI features. Some of its AI tools will run directly on devices. Others will use server-based chips like Baltra. The setup is part of what Apple calls “Private Cloud Compute.”

The company says users won’t need to sign in, and data will be kept anonymous. But the approach depends on having a solid foundation of hardware – both in devices and in the cloud. That’s where chips like Baltra come in. Building its own server chips would give Apple more control over performance, security, and integration.

No backup plan: A pattern in Apple’s hardware strategy

Srouji said Apple is used to taking big hardware risks. When the company moved its Mac lineup from Intel to Apple Silicon in 2020, it didn’t prepare a backup plan.

“Moving the Mac to Apple Silicon was a huge bet for us. There was no backup plan, no split-the-lineup plan, so we went all in, including a monumental software effort,” he said.

The same mindset now seems to apply to Apple’s AI chips. Srouji said the company is willing to go all in again, trusting that AI tools can make the chip design process faster and more precise.

EDA firms like Synopsys and Cadence shape the roadmap

While Apple designs its own chips, it depends heavily on tools built by other companies. Srouji mentioned how important EDA vendors are to Apple’s chip efforts. Cadence and Synopsys are both updating their software to include more AI features.

Synopsys recently introduced a product called AgentEngineer. It uses AI agents to help chip designers automate repetitive tasks and manage complex workflows. The idea is to let human engineers focus on higher-level decisions. The changes could make it easier for companies like Apple to speed up chip development.

Cadence is also expanding its AI offerings. Both firms are in a race to meet the needs of tech companies that want faster and cheaper ways to design chips.

What comes next: Talent, testing, and production

As Apple adds more AI into its chip design, it will need to bring in new kinds of talent. That includes engineers who can work with AI tools, as well as people who understand both hardware and machine learning.

At the same time, chips like Baltra still need to be tested and manufactured. Apple will likely continue to rely on partners like TSMC for chip production. But the design work is moving more in-house, and AI is playing a bigger role in that shift.

How Apple integrates these AI-designed chips into products and services remains to be seen. What’s clear is that the company is trying to tighten its control over the full stack – hardware, software, and now the infrastructure that powers AI.

#2024#ADD#agents#ai#AI AGENTS#AI chips#AI Infrastructure#AI integration#ai tools#apple#apple intelligence#Apple Watch#approach#artificial#Artificial Intelligence#automation#backup#broadcom#Building#cadence#challenge#chip#Chip Design#chip production#chips#Cloud#cloud infrastructure#Companies#complexity#data

0 notes

Text

Top Jobs You Can Land After Completing a Machine Learning Course in London

As technology continues to reshape industries, Machine Learning (ML) has become one of the most sought-after skills globally. Whether you're a student looking to break into tech or a professional aiming to future-proof your career, enrolling in a Machine Learning Course in London opens the door to some of the most lucrative and high-impact job opportunities available today.

With London's status as a global tech hub—home to world-class universities, tech startups, and multinational firms—completing your ML training here can give you a competitive edge in the job market. In this article, we’ll explore the top career paths you can pursue after completing a Machine Learning course in London, the responsibilities involved, average salary expectations, and the key skills needed for each role.

Why Choose London for Machine Learning?

London is one of the top cities in Europe—and the world—for AI and ML talent development. By enrolling in a Machine Learning course in London, you gain access to:

Renowned instructors from leading tech firms and academia

Real-world, project-based learning experiences

A growing ecosystem of AI-driven startups, enterprises, and government initiatives

Career fairs, internships, and job placement support

Once you complete your ML certification, you'll be ready to take on a wide range of roles across industries such as finance, healthcare, e-commerce, logistics, and cybersecurity.

1. Machine Learning Engineer

Role Overview: As a Machine Learning Engineer, you'll design, build, and deploy intelligent systems that learn from data and make predictions. This is one of the most in-demand and high-paying roles in the AI domain.

Responsibilities:

Developing and optimizing ML algorithms

Building pipelines for data collection and preprocessing

Training models using large datasets

Deploying ML models into production environments

Collaborating with software engineers and data scientists

Average Salary in London: £60,000 – £90,000 per year Top Employers: Google DeepMind, Revolut, Babylon Health, Ocado Technology

2. Data Scientist

Role Overview: After completing a Machine Learning Course in London, many professionals step into the role of a Data Scientist. This position blends statistical analysis, programming, and ML to uncover insights from data and solve business problems.

Responsibilities:

Performing exploratory data analysis (EDA)

Building predictive models

Designing A/B testing strategies

Presenting insights to stakeholders using data visualization

Leveraging ML models to drive business growth

Average Salary in London: £50,000 – £80,000 per year Top Employers: Barclays, Deloitte, Sky, Expedia Group

3. AI Research Scientist

Role Overview: If you're academically inclined and interested in pushing the boundaries of what's possible with AI, you can pursue a career as an AI Research Scientist.

Responsibilities:

Conducting original research in machine learning, deep learning, NLP, etc.

Publishing papers in top AI conferences (e.g., NeurIPS, ICML, CVPR)

Experimenting with cutting-edge neural network architectures

Collaborating with academic institutions and R&D labs

Average Salary in London: £70,000 – £120,000+ Top Employers: Google DeepMind, Meta AI, University College London, Microsoft Research

4. Data Analyst (with ML Skills)

Role Overview: Although Data Analysts focus more on interpretation than model building, those with ML skills can work on advanced data-driven insights, automation, and anomaly detection.

Responsibilities:

Interpreting large data sets to identify trends and patterns

Creating dashboards using tools like Power BI or Tableau

Automating reports and decision-making processes with ML

Supporting business teams with data-backed recommendations

Average Salary in London: £40,000 – £60,000 per year Top Employers: HSBC, Tesco, BBC, Deliveroo

5. NLP Engineer (Natural Language Processing)

Role Overview: After a Machine Learning course in London that includes NLP modules, you can specialize as an NLP Engineer—a role focused on developing systems that understand and process human language.

Responsibilities:

Building chatbots and voice assistants

Text classification, sentiment analysis, and topic modeling

Training transformer models like BERT or GPT

Working with unstructured text data from customer reviews, support tickets, etc.

Average Salary in London: £55,000 – £90,000 per year Top Employers: Accenture, Amazon Alexa, BBC R&D, Thought Machine

6. Computer Vision Engineer

Role Overview: Computer Vision Engineers develop AI systems that can "see" and interpret visual inputs like images and videos—essential in fields like healthcare imaging, autonomous driving, and augmented reality.

Responsibilities:

Image classification and object detection

Working with OpenCV, YOLO, TensorFlow, and PyTorch

Developing AR/VR experiences

Enhancing security systems with facial recognition

Average Salary in London: £60,000 – £100,000 per year Top Employers: Tractable AI, Dyson, Arm, Magic Leap

7. Robotics Engineer with ML Focus

Role Overview: If you’re interested in automation and physical systems, you can work as a Robotics Engineer applying machine learning to enhance robotic perception, planning, and control.

Responsibilities:

Integrating ML models with hardware and IoT devices

Working on self-driving cars or robotic arms

Developing autonomous drones or warehouse robots

Sensor fusion and real-time decision-making systems

Average Salary in London: £60,000 – £95,000 per year Top Employers: Ocado Robotics, Automata, Dyson, Starship Technologies

8. Business Intelligence (BI) Developer

Role Overview: BI developers with machine learning knowledge are able to move beyond reporting and leverage predictive analytics for data-driven decision-making.

Responsibilities:

Building data pipelines and ETL processes

Creating predictive dashboards

Working with SQL, Python, and BI tools

Advising decision-makers based on trends and forecasts

Average Salary in London: £45,000 – £65,000 per year

Top Employers: NHS, Unilever, KPMG, Sky

Final Thoughts

A Machine Learning Course in London is more than just an academic credential — it’s a launchpad into a future-facing career. With the UK tech industry booming and London standing as a major AI innovation center, your training here will equip you with both skills and opportunities.

Whether your interests lie in coding intelligent systems, analyzing data, building chatbots, or managing AI products, the job landscape post-course is rich and expanding. The key is to build a strong portfolio, stay curious, and leverage London’s vibrant tech ecosystem to connect with employers and collaborators.

#Best Data Science Courses in London#Artificial Intelligence Course in London#Data Scientist Course in London#Machine Learning Course in London

0 notes

Text

Unlocking Data Science's Potential: Transforming Data into Meaningful Insight

Data is created constantly in our digitally connected world, from social media likes to financial transactions and sensor activity. However, without the ability to extract valuable insights from this enormous amount of data, it is not very useful. That is where data science comes in. It is a multidisciplinary field that combines computer science, statistics, and subject-specific expertise to evaluate data and provide useful insight. This essay explores the definition of data science, its essential components, its significance, and how it is transforming industries worldwide.

Understanding Data Science: To find patterns and inform decisions, data science essentially entails collecting, cleaning, testing, and analysing large, complicated datasets. It combines a number of fields:

Statistics: To establish predictive models and derive conclusions.

Computer science: For algorithm implementation, automation, and coding.

Domain expertise: To place insights in the context of a particular field, such as healthcare or finance.

It is the responsibility of a data scientist to pose pertinent queries, handle massive amounts of data effectively, and produce findings that have an impact on operations and strategy.

The Significance of Data Science

1. Informed Decision Making: To improve the user experience, streamline procedures, and identify emerging trends, organisations rely on data-driven insight.

2. Increased Effectiveness: Businesses can decrease manual labour by automating operations like spotting fraudulent transactions or managing AI-powered customer support.

3. Personalised Experiences: Websites like Netflix and Amazon analyse user data to provide tailored suggestions for products and content.

4. Improvements in Medicine: Data science helps with early diagnosis, treatment development, and personalising medical care.

Essential Data Science Foundations:

1. Data Acquisition & Preparation: Databases, web scraping, APIs, and sensors are some sources of data. Before analysis starts, it is crucial to clean the data, correct errors, eliminate duplicates, and handle missing values.
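As a rough illustration of the preparation step, the sketch below removes duplicate rows and fills in missing values using only the Python standard library. The record layout (`id`, `amount`) and the choice to fill gaps with the mean are assumptions made for the example, not something the post prescribes.

```python
from statistics import mean

def clean_records(records):
    """Drop exact duplicate rows, then fill missing 'amount' values with the mean."""
    seen = set()
    unique = []
    for row in records:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the whole row
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))      # copy so the input is left untouched

    # Mean of the values that are present (assumes at least one is not missing).
    known = [r["amount"] for r in unique if r["amount"] is not None]
    fill_value = mean(known)
    for r in unique:
        if r["amount"] is None:
            r["amount"] = fill_value
    return unique

# Hypothetical raw data: one duplicate row and one missing value.
raw = [
    {"id": 1, "amount": 10.0},
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": None},
    {"id": 3, "amount": 20.0},
]
cleaned = clean_records(raw)
```

Mean imputation is only one option; dropping incomplete rows or using the median may suit skewed data better.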

2. Exploratory Data Analysis (EDA): EDA uses visualisation tools such as Seaborn or Matplotlib to identify patterns in the data, spot anomalies, and understand the relationships between variables.
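Anomaly spotting is usually done visually, but the same idea can be sketched numerically. The example below applies the common 1.5 × IQR rule (an assumed choice here, since the post names no specific method) to a made-up list of daily order counts.

```python
from statistics import quantiles

def iqr_outliers(values):
    """Return values lying more than 1.5 * IQR below Q1 or above Q3."""
    q1, _, q3 = quantiles(values, n=4)  # the three quartile cut points
    spread = q3 - q1                    # interquartile range
    low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
    return [v for v in values if v < low or v > high]

# Hypothetical daily order counts with one suspicious spike.
daily_orders = [10, 12, 11, 13, 12, 11, 95]
flagged = iqr_outliers(daily_orders)
```

In practice a box plot in Seaborn or Matplotlib would show the same outlier at a glance; the numeric check is handy when the data is too large to eyeball.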

3. Modelling & Machine Learning: Common techniques include:

Regression: For predicting numerical values.

Classification: For sorting data into categories (e.g., spam detection).

Clustering: For group segmentation (such as customer profiling).

Using these techniques, data scientists create models that automate procedures and predict outcomes. Enrol in a reputable software training institution's Data Science course.
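Of the three techniques listed, clustering is perhaps the easiest to show in a few lines. The sketch below is a deliberately tiny one-dimensional k-means, with made-up customer spend figures and hand-picked starting centroids; real projects would normally use a library implementation instead.

```python
from statistics import mean

def kmeans_1d(values, centroids, iterations=10):
    """Tiny 1-D k-means: assign each value to its nearest centroid, then re-average."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Keep the previous centroid if a cluster ends up empty.
        centroids = [mean(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return centroids, clusters

# Hypothetical monthly spend per customer: a low-spend and a high-spend group.
spend = [12, 15, 14, 90, 95, 88]
centroids, clusters = kmeans_1d(spend, centroids=[0, 100])
```

Each resulting cluster can then be treated as a customer segment, which is the profiling use case mentioned above.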

4. Visualisation & Storytelling: For stakeholders who are not technical, visual tools such as Tableau and Power BI help distil complex data into understandable, engaging dashboards and reports.

Data Science Applications Across Industries:

1. Online shopping

Personalised product recommendations.

Demand-driven real-time pricing schemes.

2. Finance & Banking

Identifying fraudulent activity.

Automated trading powered by predictive analytics.

3. Medical Care

Tracking the spread of diseases and formulating treatment recommendations.

Using AI to improve medical imaging.

4. Social Media

Assessing public opinion and user sentiment.

Curating feeds and optimising content.

Typical Data Science Challenges:

Despite its potential, data science has drawbacks.

Ethics & Privacy: Protecting user data and preventing algorithmic bias.

Data Integrity: Low-quality data leads to inaccurate insights.

Scalability: Cloud computing and other high-performance infrastructure are necessary for managing large datasets.

The Road Ahead:

As artificial intelligence advances, data science will remain a crucial driver of innovation. Future trends include:

AutoML – Making machine learning accessible to non-specialists.

Responsible AI – Ensuring fairness and transparency in automated systems.

Edge Computing – Bringing data processing closer to the source for real-time insights.

Conclusion:

Data science is redefining how businesses, governments, and healthcare providers make decisions by converting raw data into strategic insight. Its impact spans countless sectors and continues to grow. With rising demand for skilled professionals, now is an ideal time to explore this dynamic field.

0 notes

Text

Master Data Like a Pro: Enroll in the 2025 R Programming Bootcamp for Absolute Beginners!!

Are you curious about how companies turn numbers into real-world decisions? Have you ever looked at graphs or reports and wondered how people make sense of so much data?

If that sounds like you, then you’re about to discover something that could completely change the way you think about numbers — and your career. Introducing the 2025 R Programming Bootcamp for Absolute Beginners: your all-in-one launchpad into the exciting world of data science and analytics.

This isn’t just another course. It’s a bootcamp built from the ground up to help beginners like you master R programming — the language trusted by data scientists, statisticians, and analysts across the world.

Let’s break it down and see what makes this course the go-to starting point for your R journey in 2025.

Why Learn R Programming in 2025?

Before we dive into the bootcamp itself, let’s answer the big question: Why R?

Here’s what makes R worth learning in 2025:

Data is the new oil — and R is your refinery.

It’s free and open-source, meaning no costly software licenses.

R is purpose-built for data analysis, unlike general-purpose languages.

It’s widely used in academia, government, and corporate settings.

With the rise of AI, data literacy is no longer optional — it’s essential.

In short: R is not going anywhere. In fact, it’s only growing in demand.

Whether you want to become a data scientist, automate your reports, analyze customer trends, or even enter into machine learning, R is one of the best tools you can have under your belt.

What Makes This R Bootcamp a Perfect Fit for Beginners?

There are plenty of R programming tutorials out there. So why should you choose the 2025 R Programming Bootcamp for Absolute Beginners?

Because this bootcamp is built with you in mind — the total beginner.

✅ No coding experience? No problem. This course assumes zero background in programming. It starts from the very basics and gradually builds your skills.

✅ Hands-on learning. You won’t just be watching videos. You’ll be coding along with real exercises and practical projects.

✅ Step-by-step explanations. Every topic is broken down into easy-to-understand segments so you’re never lost or overwhelmed.

✅ Real-world applications. From day one, you’ll work with real data and solve meaningful problems — just like a real data analyst would.

✅ Lifetime access & updates. Once you enroll, you get lifetime access to the course, including any future updates or new content added in 2025 and beyond.

Here's What You'll Learn in This R Bootcamp

Let’s take a sneak peek at what you’ll walk away with:

1. The Foundations of R

Installing R and RStudio

Understanding variables, data types, and basic operators

Writing your first R script

2. Data Structures in R

Vectors, matrices, lists, and data frames

Indexing and subsetting data

Data importing and exporting (CSV, Excel, JSON, etc.)

3. Data Manipulation Made Easy

Using dplyr to filter, select, arrange, and group data

Transforming messy datasets into clean, analysis-ready formats

4. Data Visualization with ggplot2

Creating stunning bar plots, line charts, histograms, and more

Customizing themes, labels, and layouts

Communicating insights visually

5. Exploratory Data Analysis (EDA)

Finding patterns and trends

Generating summary statistics

Building intuition from data

6. Basic Statistics & Data Modeling

Mean, median, standard deviation, correlation

Simple linear regression

Introduction to classification models
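To give a feel for what this module covers, here is a minimal sketch of the same quantities (mean, median, standard deviation, correlation, and a simple least-squares line). It is written in Python rather than R purely for illustration, uses only the standard library, and the study-hours data is invented; the course itself would presumably do this with R's built-in functions.

```python
from statistics import mean, median, stdev

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return slope, intercept

# Hypothetical data: hours studied vs. test score (exactly 50 + 2 * hours).
hours = [1, 2, 3, 4, 5]
score = [52, 54, 56, 58, 60]

summary = {"mean": mean(score), "median": median(score), "sd": stdev(score)}
r = pearson(hours, score)
slope, intercept = fit_line(hours, score)
```

Because the invented data lies exactly on a line, the correlation comes out at 1 and the fitted slope and intercept recover 2 and 50; real data would be noisier.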

7. Bonus Projects

Build dashboards

Analyze customer behavior

Create a mini machine-learning pipeline

And don’t worry — everything is taught in plain English with real-world examples and analogies. This is not just learning to code; it’s learning to think like a data professional.

Who Should Take This Course?

If you’re still wondering whether this bootcamp is right for you, let’s settle that.

You should definitely sign up if you are:

✅ A student looking to boost your resume with data skills

✅ A career switcher wanting to break into analytics or data science

✅ A marketer or business professional aiming to make data-driven decisions

✅ A freelancer wanting to add analytics to your skill set

✅ Or just someone who loves to learn and try something new

In short: if you’re a curious beginner who wants to learn R the right way — this course was made for you.

Real Success Stories from Learners Like You

Don’t just take our word for it. Thousands of beginners just like you have taken this course and found incredible value.

"I had zero background in programming or data analysis, but this course made everything click. The instructor was clear, patient, and made even the complicated stuff feel simple. Highly recommend!" — ★★★★★

"I used this bootcamp to prepare for my first data internship, and guess what? I landed the role! The hands-on projects made all the difference." — ★★★★★

How R Programming Can Transform Your Career in 2025

Here’s where things get really exciting.

With R under your belt, here are just a few of the opportunities that open up for you:

Data Analyst (average salary: $65K–$85K)

Business Intelligence Analyst

Market Researcher

Healthcare Data Specialist

Machine Learning Assistant or Intern

And here’s the kicker: even if you don’t want a full-time data job, just knowing how to work with data makes you more valuable in almost any field.

In 2025 and beyond, data skills are the new power skills.

Why Choose This Bootcamp Over Others?

It’s easy to get lost in a sea of online courses. But the 2025 R Programming Bootcamp for Absolute Beginners stands out for a few key reasons:

Updated content for 2025 standards and tools

Beginner-first mindset — no jargon, no skipping steps

Interactive practice with feedback

Community support and Q&A access

Certificate of completion to boost your resume or LinkedIn profile

This isn’t just a video series — it’s a true bootcamp experience, minus the high cost.

Common Myths About Learning R (and Why They’re Wrong)

Let’s bust some myths, shall we?

Myth #1: R is too hard for beginners. Truth: This bootcamp breaks everything down step by step. If you can use Excel, you can learn R.

Myth #2: You need a math background. Truth: While math helps, the course teaches everything you need to know without expecting you to be a math whiz.

Myth #3: It takes months to learn R. Truth: With the right structure (like this bootcamp), you can go from beginner to confident in just a few weeks of part-time study.

Myth #4: Python is better than R. Truth: R excels in statistics, visualization, and reporting — especially in academia and research.

Learning on Your Own Terms

Another great thing about this course?

You can learn at your own pace.

Pause, rewind, or skip ahead — it’s your journey.

No deadlines, no pressure.

Plus, you’ll gain access to downloadable resources, cheat sheets, and quizzes to reinforce your learning.

Whether you have 20 minutes a day or 2 hours, the course fits into your schedule — not the other way around.

Final Thoughts: The Best Time to Start Is Now

If you’ve been waiting for a sign to start learning data skills — this is it.

The 2025 R Programming Bootcamp for Absolute Beginners is not just a course. It’s a launchpad for your data journey, your career, and your confidence.

You don’t need a background in coding. You don’t need to be a math genius. You just need curiosity, commitment, and a little bit of time.

So go ahead — take that first step. Because in a world where data rules everything, learning R might just be the smartest move you make this year.

0 notes

Photo

The US's decision to ban the sale of chip design software to China threatens the ongoing trade negotiations and marks a significant shift in tech relations. This move restricts exports of EDA software from major providers like Synopsys, Cadence, and Siemens, pushing Chinese firms to explore domestic alternatives. With restrictions coming into effect soon, China is accelerating efforts to develop its own EDA solutions, potentially leading to more localized chip design innovations. Despite allegations of software piracy, these developments may ultimately benefit China’s semiconductor independence. This change underscores how geopolitical tensions can reshape the global tech ecosystem. Will China’s domestic innovations outpace US restrictions? Stay tuned for updates on the evolving tech landscape. How do you think these restrictions will impact global chip development? #Semiconductors #ChinaTech #TradeWar #EDA #USChinaRelations #TechInnovation #GlobalTrade #ChipDesign #DomesticDevelopment #TechPolicy #FutureOfTech

0 notes

Text

US imposes new rules to curb semiconductor design software sales to China

[TECH AND FINANCIAL] It appears the Trump administration has imposed new export controls on chip design software as it seeks to further undermine China’s ability to make and use advanced AI chips. Siemens EDA, Cadence Design Systems and Synopsys all confirmed that they have received notices from the U.S. Commerce Department about new export controls on electronic design automation (EDA) software…

0 notes